Representation learning and multi-labelled classification

This project is motivated by recent work in the machine learning and computer vision communities that highlights the usefulness of feature learning for classification tasks. We applied our approach to the JRS 2012 Data Mining Competition, which consisted of the automatic classification of 20,000 multi-labelled biomedical reports. Our method placed fifth among more than 5000 submitted solutions and 126 active teams.

- Ryan Kiros, Axel J. Soto, Evangelos Milios, Vlado Keselj. “Representation Learning for Sparse, High-Dimensional Multi-Label Classification”. In: Jing Tao Yao et al. (Eds.). Lecture Notes in Computer Science, Springer-Verlag. 8th International Conference on Rough Sets and Current Trends in Computing 2012. August 17-20, 2012; Chengdu, China. [Paper]

Linear dimensionality reduction

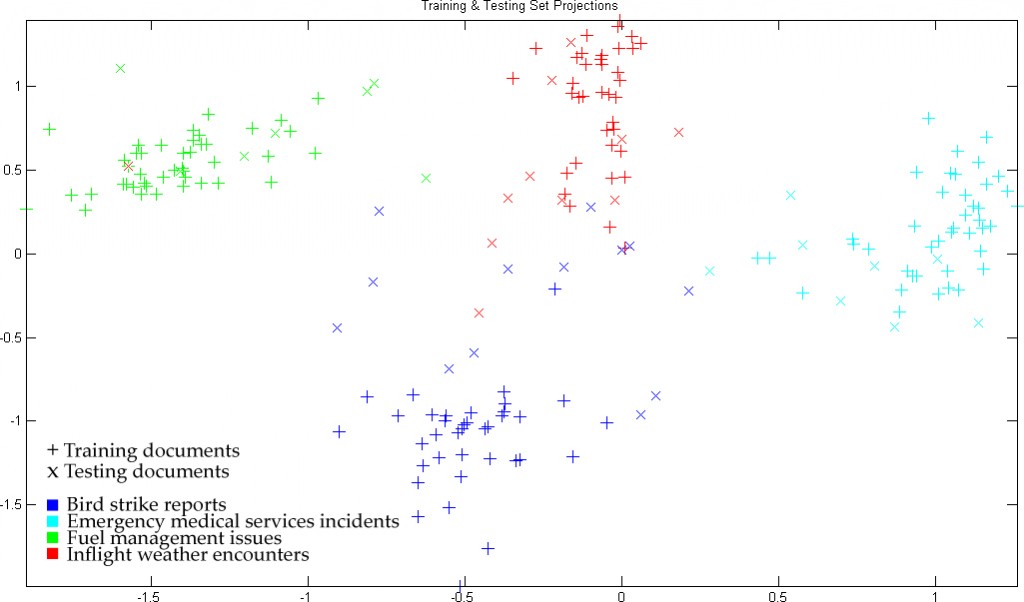

We showed that supervised linear dimensionality reduction methods allow a straightforward interpretation of the projected space and also improve pattern learning. Of the approaches we tried, CMM stood out as the most consistent across all metrics.

- Axel J. Soto, Marc Strickert, Gustavo E. Vazquez, Evangelos Milios. “Subspace Mapping of Noisy Text Documents”. In: Cory Butz, Pawan Lingras (Eds.). Lecture Notes in Artificial Intelligence, Vol. 6657, pp. 377-383. Springer-Verlag Berlin Heidelberg. Canadian Conference on Artificial Intelligence 2011. May 25-27, 2011; St. John’s, Canada. [Paper]